Finally, Text-to-Image That Works!

Digging into OpenAI's groundbreaking new image model

If you’ve been reading my Substack for a while, you know I like to illustrate each post with AI-generated images. It’s amazing technology, but it’s often pretty frustrating. Weird artifacts are common, especially garbled writing, and when I give specific instructions like “don’t include any writing,” the model frequently does the opposite. If my first couple of images aren’t good, it’s usually better to just start a new chat and try again than to attempt to coax the model into giving me the correct image.

But last week, that all changed. The new image generation built into GPT-4o in ChatGPT is a major breakthrough. As you’ll see below, it’s an entirely different approach, and keeping the 4o name undersells what a huge shift this is.[1] (Note that it’s currently available to ChatGPT Plus and ChatGPT Pro subscribers, and it is expected to roll out to ChatGPT Enterprise soon.)

It can handle text perfectly. It can iterate based on feedback. It makes realistic images where humans have five fingers. It’s exactly what we’ve all wanted text-to-image to do since the technology first took off in the summer of 2022. The only downside is that it’s pretty slow (though still much faster than a human would be).

In this post, I’ll cover how this works and what it means for content creators, marketers, and investors.

First, let me show you what this can do.

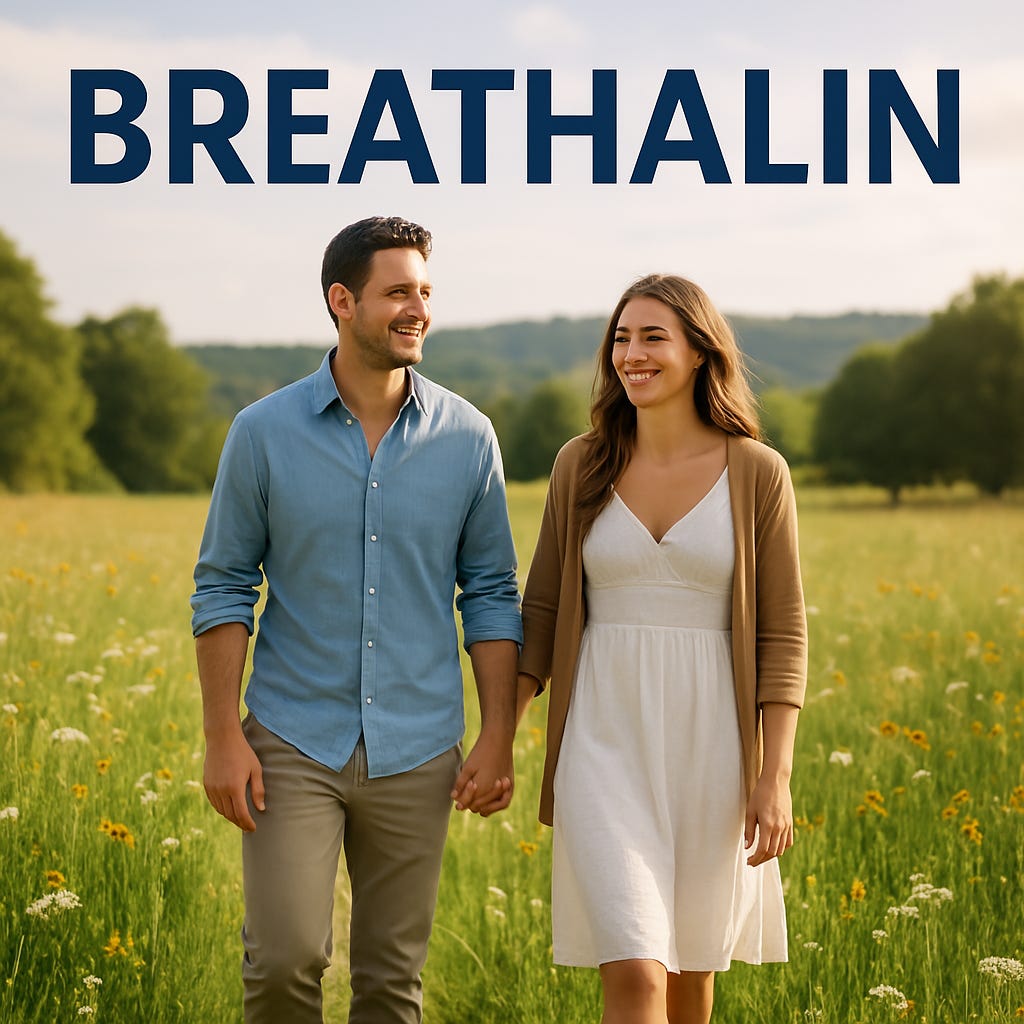

Breathe Easy

Say I’m designing a fictional ad campaign for a new allergy medication called Breathalin. The prompt was “Make an image for a new allergy medicine ad campaign. The medicine is called Breathalin and the name should be displayed prominently. Show a photo realistic couple strolling through a meadow”

What I get is clean and professional—legible text, realistic humans, and none of the usual weird text artifacts.

Now compare that to what the old model generates from the same prompt: a cartoon-style image, with random words and non-words placed under the drug name.

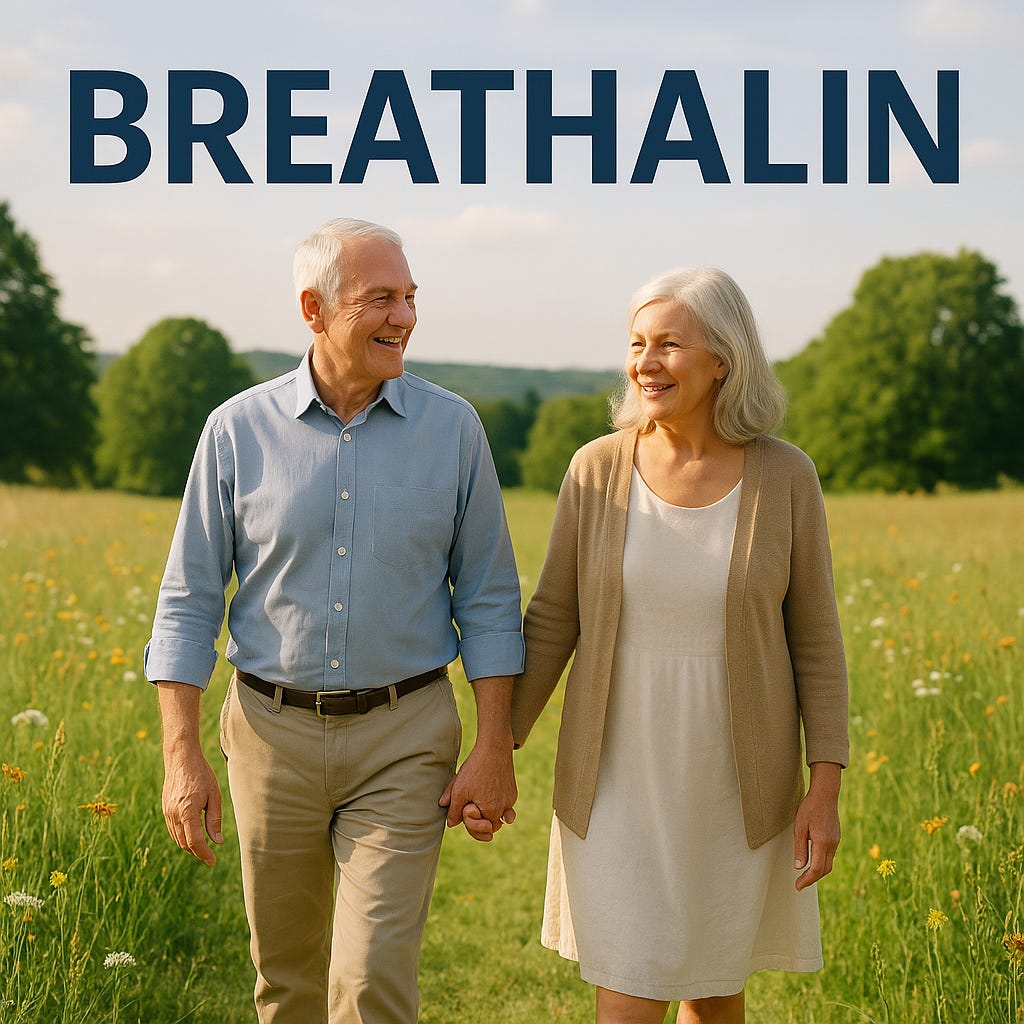

And when I asked the new model to replace the couple with an older couple? It barely changed anything else; it essentially reused the ad and just swapped in the new people.

This is a huge deal because it means you could have a bot that personalizes an ad based on who sees it, without having to worry that it will add nonsense words or significantly change the presentation.

Finally, I asked it to add a tagline, “Now you can breathe easy even in allergy season.”[2]

It worked perfectly. The couple changed slightly, since the model did generate a new image, but the difference is negligible. If you’ve already had the image approved by legal and then change the tagline, the new image usually won’t be different enough to require a fresh approval.

This is a huge leap forward!

So, how does this work?

In previous versions, ChatGPT didn’t actually generate images using the 4o model. Instead, it would take your request, craft a prompt behind the scenes, and send that to a separate model, DALL-E 3. DALL-E 3 is a diffusion model, meaning it starts with random noise and gradually refines it into a coherent image. Until now, nearly every widely used AI image model, including Stable Diffusion, has worked this way. It’s a clever approach that has gotten us quite far, but it has limitations, especially when it comes to things like precise text rendering or iterative edits.
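For the technically curious, here’s a toy sketch of what “start with noise and gradually refine it” looks like as a loop. It follows the deterministic, DDIM-style update that many diffusion samplers use, with one big simplification: a real model runs a trained neural network at every step to guess the clean image, and here that network is replaced by a hypothetical `oracle_denoiser` that already knows the answer. The cosine noise schedule and all names are illustrative assumptions, not a description of how DALL-E 3 is actually built.

```python
import numpy as np


def alpha_bar(t: int, steps: int) -> float:
    """Cosine noise schedule: fraction of the clean signal kept at step t.

    1.0 at t=0 (clean image), essentially 0.0 at t=steps (pure noise).
    """
    return float(np.cos((t / steps) * np.pi / 2) ** 2)


def oracle_denoiser(x_t: np.ndarray, t: int, target: np.ndarray) -> np.ndarray:
    # HYPOTHETICAL stand-in: a real diffusion model runs a trained network on
    # (x_t, t, prompt) to predict the clean image. This toy just returns the
    # known target so the sampling loop itself is easy to follow.
    return target


def sample(target: np.ndarray, steps: int = 50, seed: int = 0) -> np.ndarray:
    """Deterministic DDIM-style sampling from pure noise down to step 0."""
    rng = np.random.default_rng(seed)
    x = rng.standard_normal(target.shape)  # start from pure random noise
    for t in range(steps, 0, -1):
        ab_t, ab_prev = alpha_bar(t, steps), alpha_bar(t - 1, steps)
        x0_hat = oracle_denoiser(x, t, target)  # guess of the clean image
        # Noise implied by the current x, given that guess.
        eps_hat = (x - np.sqrt(ab_t) * x0_hat) / np.sqrt(1.0 - ab_t)
        # One step toward the clean image: keep more signal, less noise.
        x = np.sqrt(ab_prev) * x0_hat + np.sqrt(1.0 - ab_prev) * eps_hat
    return x


if __name__ == "__main__":
    image = np.linspace(-1.0, 1.0, 16).reshape(4, 4)  # a tiny 4x4 "image"
    result = sample(image)
    print("max error after sampling:", np.abs(result - image).max())
```

Swapping the oracle for a trained network conditioned on your prompt is, roughly, what turns this toy loop into a real image generator.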

The new model, however, generates images natively: the same 4o model that reads your request creates the image itself, with no hand-off to a separate image model. This gives you more control, better feedback loops, and significantly more accurate outputs. Want to add a logo, adjust lighting, or change a facial expression? It can apply the change to just that part of the image, because the model “understands” what’s in it.

One thing to note: images now take quite a bit longer to generate. You’ll notice a blurry preview that gradually comes into focus, a hint that the model is using a different approach. For advertising, this means you would need to have the model pre-render the different versions of an ad ahead of time and then serve the right one at the right moment, which wouldn’t be particularly difficult.
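Here’s a minimal sketch of that pre-render-then-serve pattern. Everything in it is hypothetical: the `Segment` fields, the file paths, and especially `render`, which stands in for whatever slow image-generation call you’d actually make (no particular API is assumed). The slow step runs once per audience segment ahead of time; at serving time, picking an ad is just a dictionary lookup with a generic fallback.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Iterable


@dataclass(frozen=True)
class Segment:
    # Hypothetical audience attributes; frozen so a Segment can be a dict key.
    age_band: str
    region: str


def prerender(segments: Iterable[Segment],
              render: Callable[[Segment], str]) -> Dict[Segment, str]:
    """Offline step: call the slow image model once per segment, ahead of time.

    `render` is a placeholder for a real image-generation call; it should
    return wherever the finished image was stored (a path or URL).
    """
    return {seg: render(seg) for seg in segments}


def pick_ad(cache: Dict[Segment, str], viewer: Segment, default: str) -> str:
    """Serve-time step: a fast lookup, falling back to a generic ad."""
    return cache.get(viewer, default)


if __name__ == "__main__":
    def fake_render(seg: Segment) -> str:
        # Stand-in for the image model: just builds a file name.
        return f"ads/breathalin_{seg.age_band}_{seg.region}.png"

    cache = prerender([Segment("25-34", "US"), Segment("65+", "US")], fake_render)
    print(pick_ad(cache, Segment("65+", "US"), "ads/breathalin_default.png"))
    print(pick_ad(cache, Segment("18-24", "UK"), "ads/breathalin_default.png"))
```

The first lookup returns the pre-rendered variant for that segment; the second viewer falls outside the pre-rendered segments and gets the default ad, so nobody ever waits on the slow model.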

What This Means

In short: this could redefine how advertising and content creation work. Until now, AI-generated images were mostly used for basic mockups or inspiration boards, since they weren’t reliable enough to produce finished content. But now, we’re entering a world where a business can create full campaigns that are photorealistic, on-brand, and editable, all through natural language prompts.

And here’s where it gets really interesting: these campaigns can be personalized at scale. Imagine an e-commerce company running an email campaign. Instead of sending everyone the same image, they could automatically tailor it to reflect the recipient’s age, location, lifestyle, or preferences. Just as in the advertising example, the people or products in an ad could be customized to specific ages and demographics: I might get an ad with two boys, while someone else gets one with a boy and a girl. This kind of hyper-targeted creative used to be prohibitively expensive and time-consuming. Now it’s doable with this type of model.

That extra personalization will lead to higher ROI, at least for a while. More relevant visuals generally lead to better engagement, and better engagement drives performance. Expect to see more personalized ads landing in your inbox, on your feed, and across the internet.

For agencies and creative studios, the shift could be a mixed bag. On the one hand, this technology dramatically increases production speed. Teams can iterate faster, test more variations, and deliver work to clients in hours rather than days. On the other hand, some clients may decide to generate their own creative once they have a campaign idea. Agencies will need to lean more heavily into concept development, storytelling, and strategy: areas where AI can help humans today but is far from replacing them.

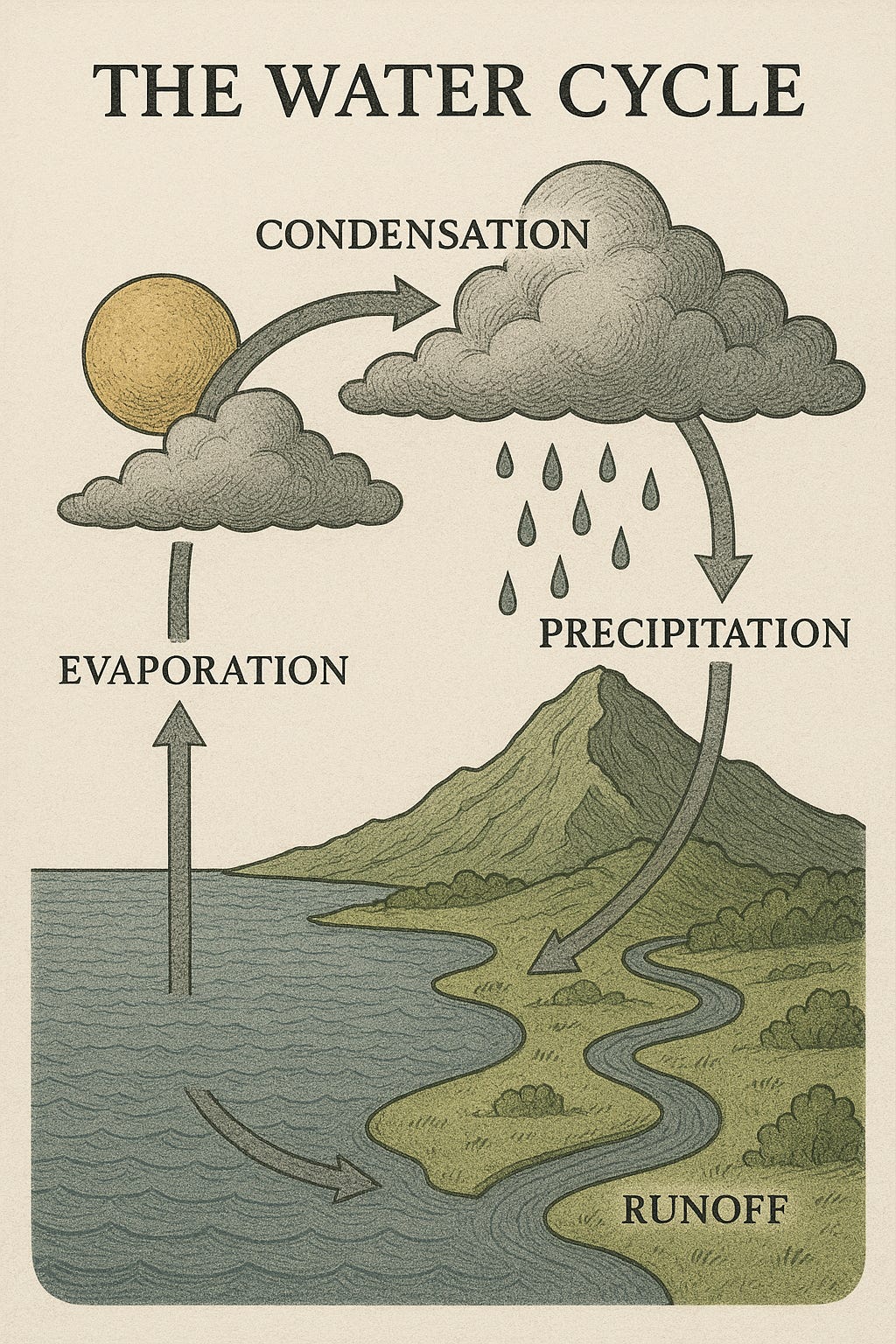

For other types of content creators, the impact might be more straightforwardly positive: faster production and better margins. Need a diagram for your textbook that explains the water cycle? Here it is.[3]

This is a big leap forward, and it’s likely just the beginning. Expect to see more of these non-diffusion (or hybrid) models emerging from other AI players soon.[4] And expect the amount of AI-generated content in your digital environment, from emails to ads to infographics, to skyrocket in the months ahead.

We’re entering the promised land for text-to-image. It will be exciting to see what people do with it.

[1] Sigh. Yet another example of terrible AI naming. This would’ve been a great moment to change the model name. Even going from 4o to 4g or 4p or something would’ve made it clear that the capabilities are radically improved.

[2] I chose this unwieldy text because I’ve found in the past that the longer the text, the harder it is for the model to reproduce it properly. I’m not saying this is a great tagline.

[3] Full disclosure: this example actually turned out to be a little harder than I expected. When I tried to make some tweaks to it, it wasn’t quite as seamless as in the previous example, but it was still much better than before.

[4] I’m really curious to see how long it takes others to catch up. Previous breakthroughs from OpenAI have been matched within a few months. Will it happen as quickly this time, or is this new type of model harder to imitate? I’d bet on less than three months, but I could be wrong.